Adversarial Machine Vision

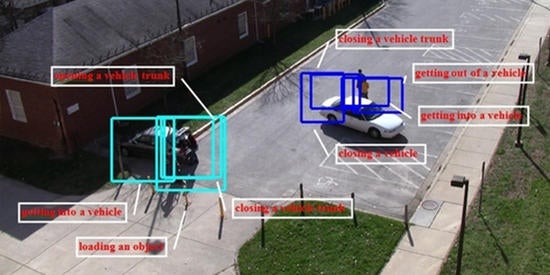

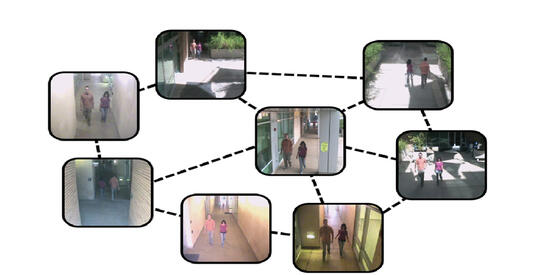

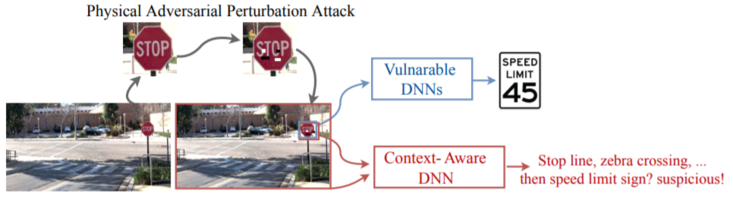

Deep Neural Networks (DNNs) are the state-of-the-art tools for a wide range of tasks. However, recent studies have found that DNNs are vulnerable to adversarial perturbation attacks: changes to the input that are hardly perceptible to humans but cause misclassification in DNN-based decision-making systems, e.g., image classifiers. The majority of existing attack mechanisms are aimed at misclassifying specific objects and activities.

However, most scenes contain multiple objects, and there is usually some relationship among the objects in a scene, e.g., certain objects co-occur more frequently than others. This is often referred to as context in computer vision and is related to top-down feedback in human vision; the idea has been widely used in recognition problems. Even so, context has not played a significant role in the design of adversarial attacks.
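To make the notion of an adversarial perturbation concrete, here is a minimal sketch of the well-known Fast Gradient Sign Method (FGSM) applied to a toy linear classifier. It is an illustration only, not one of the attacks from the papers mentioned on this page; the weights `W`, bias `B`, input `x_clean`, and budget `eps` are made-up values, and the budget is large because the toy input has only four features.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def predict(w, b, x):
    """Binary linear classifier: class 1 if the score is positive."""
    return 1 if dot(w, x) + b > 0 else 0

def loss_gradient(w, b, x, y):
    """Gradient of the logistic loss with respect to the input x."""
    p = sigmoid(dot(w, x) + b)
    return [(p - y) * wi for wi in w]

def fgsm(w, b, x, y, eps):
    """Move every feature by eps in the direction that increases the loss."""
    g = loss_gradient(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]

# Hypothetical toy model and input (assumed values, for illustration only).
W = [2.0, -3.0, 1.0, 0.5]
B = 0.1
x_clean = [0.2, -0.1, 0.3, 0.4]
eps = 0.25

x_adv = fgsm(W, B, x_clean, y=1, eps=eps)
print("clean prediction:", predict(W, B, x_clean))
print("adversarial prediction:", predict(W, B, x_adv))
```

Each feature moves by at most `eps`, yet the predicted class changes. On real image classifiers the same idea is applied per pixel with a far smaller budget, which is why the change can be hard for a person to notice.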

In our ECCV 2020 paper, we showed that state-of-the-art attacks are easily detected by context-aware detectors. We then developed context-aware attack methods that exploit the contextual relationships between objects in a scene to design better attack strategies. These methods were published at AAAI 2022 and CVPR 2022. We also exploited temporal contextual relationships to craft attacks against video sequences, as presented at NeurIPS 2021. Finally, we showed how language-based descriptions of an image can be used to represent context and to develop effective attack and defense strategies, in papers at NeurIPS 2022 and CVPR 2021.

In another NeurIPS 2022 paper, we proposed a general method for black-box attacks that searches over an ensemble of surrogate models to estimate the victim model being attacked.
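The following is a simplified sketch of what a search over a surrogate ensemble could look like; it is not the published algorithm. It assumes linear surrogate models, a victim that returns a real-valued score for each query, and a greedy coordinate-wise update of the ensemble weights. All names (`make_victim`, `ensemble_perturb`, `search_weights`) and all numbers are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sign(v):
    return (v > 0) - (v < 0)

def make_victim(w, b):
    """Hide the victim's parameters; the attacker only sees query outputs."""
    state = {"queries": 0}
    def query(x):
        state["queries"] += 1
        return dot(w, x) + b  # positive score means class 1
    return query, state

def ensemble_perturb(surrogates, alphas, x, eps):
    """Signed step that lowers the weighted sum of the surrogate scores."""
    g = [sum(a * w[i] for a, w in zip(alphas, surrogates))
         for i in range(len(x))]
    return [xi - eps * sign(gi) for xi, gi in zip(x, g)]

def search_weights(query, surrogates, x, eps, step=1.0, rounds=5):
    """Greedy coordinate search over ensemble weights, guided by queries."""
    alphas = [1.0] * len(surrogates)
    best_x = ensemble_perturb(surrogates, alphas, x, eps)
    best_score = query(best_x)
    for _ in range(rounds):
        for k in range(len(alphas)):
            for delta in (step, -step):
                trial = list(alphas)
                trial[k] = max(0.0, trial[k] + delta)
                cand = ensemble_perturb(surrogates, trial, x, eps)
                score = query(cand)
                if score < best_score:
                    alphas, best_x, best_score = trial, cand, score
                if best_score < 0:  # victim now predicts class 0
                    return alphas, best_x, best_score
    return alphas, best_x, best_score

# Hypothetical setup: three linear surrogates and one hidden victim.
surrogates = [[1.0, 1.0, 1.0], [1.0, -1.0, 0.2], [-1.0, 2.0, 1.0]]
query, state = make_victim([1.0, -2.0, 0.5], 0.0)
x_clean = [0.5, -0.3, 0.2]
eps = 0.4

alphas, x_adv, score = search_weights(query, surrogates, x_clean, eps)
print("weights:", alphas, "victim score:", score, "queries:", state["queries"])
```

In this toy setup the equally weighted ensemble points the perturbation in the wrong direction, and the query feedback gradually shifts weight toward the surrogate that best agrees with the victim. The appeal of this kind of design is that the search happens in the low-dimensional space of ensemble weights rather than in the input space, which tends to keep the number of victim queries small.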

A nice summary of our work can be found here.

This work has been supported by DARPA.