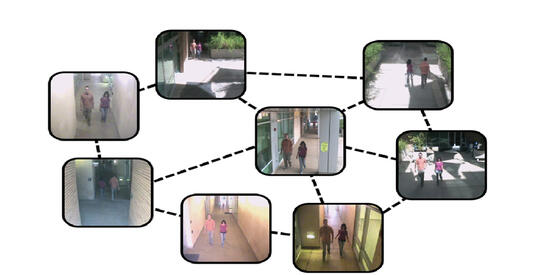

Re-identification Across Indoor-Outdoor Dataset (RAiD)

This person re-identification dataset was collected at Winston Chung Hall, UC Riverside. It uses four cameras, numbered 1, 2, 3, and 4: cameras 1 and 2 are indoor, and cameras 3 and 4 are outdoor. Forty-three people walked through these camera views, yielding 6,920 images. Of these 43 people, 41 appear in all four cameras; person 8 does not appear in camera 3, and person 34 does not appear in camera 4.
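Because two identities are missing from one outdoor camera each, the number of identities usable for matching differs between camera pairs. The sketch below encodes only the coverage facts stated above and counts the shared identities for every camera pair, labeling each pair as indoor-indoor, indoor-outdoor, or outdoor-outdoor. It assumes person IDs run from 1 to 43; the helper names (`persons_in_camera`, `shared_identities`) are hypothetical and do not describe the dataset's actual file layout.

```python
from itertools import combinations

# Camera layout as stated for RAiD: cameras 1 and 2 are indoor, 3 and 4 outdoor.
CAMERA_SETTING = {1: "indoor", 2: "indoor", 3: "outdoor", 4: "outdoor"}

# Assumption (hypothetical): person IDs are numbered 1 to 43.
PERSON_IDS = range(1, 44)

# Known gaps: person 8 is absent from camera 3, person 34 from camera 4.
MISSING = {(8, 3), (34, 4)}


def persons_in_camera(cam: int) -> set[int]:
    """Return the set of person IDs that appear in the given camera."""
    return {p for p in PERSON_IDS if (p, cam) not in MISSING}


def shared_identities(cam_a: int, cam_b: int) -> set[int]:
    """Return the identities seen by both cameras, i.e. usable for this pair."""
    return persons_in_camera(cam_a) & persons_in_camera(cam_b)


if __name__ == "__main__":
    for a, b in combinations(sorted(CAMERA_SETTING), 2):
        kind = f"{CAMERA_SETTING[a]}-{CAMERA_SETTING[b]}"
        print(f"cameras {a}-{b} ({kind}): {len(shared_identities(a, b))} shared identities")
```

Under these assumptions, the indoor pair (1, 2) shares all 43 identities, each indoor-outdoor pair shares 42, and the outdoor pair (3, 4) shares 41, which matches the 41 people reported in all four cameras.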

Dataset

Please refer to the following article when using this dataset:

A. Das, A. Chakraborty, A. Roy-Chowdhury, "Consistent Re-identification in a Camera Network," European Conference on Computer Vision (ECCV), vol. 8690, pp. 330-345, Zurich, 2014.

Tour20 Video Summarization Dataset

Tour20 is a video summarization dataset designed primarily for multi-video summarization. However, it can also be used to evaluate single-video summarization in a repeatable and efficient way. It contains 140 videos with a total duration of 6 hours 46 minutes, downloaded from YouTube under the Creative Commons license CC BY 3.0. The dataset includes three human-created ground-truth summaries for each video, as well as a diverse set of summaries describing the video collection of each tourist place. We also provide shot segmentation files that mark the shot boundaries of each video.
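The file formats and the official evaluation protocol are not described here, so the following is only a minimal sketch under stated assumptions: shot boundaries are given as frame indices at which a new shot begins, and each summary is a set of selected shot indices. It converts boundaries into shot intervals, then scores one summary against each of the three human summaries with a shot-level F-measure and averages the results. Averaging over multiple references is one common way to use several ground truths, not necessarily the protocol used by the authors; all function names are hypothetical.

```python
def boundaries_to_shots(boundaries: list[int], num_frames: int) -> list[tuple[int, int]]:
    """Turn shot-boundary frame indices into inclusive (start, end) shot intervals.

    Assumption: each boundary is the first frame of a new shot, and frames are
    numbered 0 .. num_frames - 1.
    """
    starts = [0] + sorted({b for b in boundaries if 0 < b < num_frames})
    ends = starts[1:] + [num_frames]
    return [(s, e - 1) for s, e in zip(starts, ends)]


def f_measure(predicted: set[int], reference: set[int]) -> float:
    """Shot-level F-measure between a predicted and a reference set of shot indices."""
    overlap = len(predicted & reference)
    if overlap == 0:
        return 0.0
    precision = overlap / len(predicted)
    recall = overlap / len(reference)
    return 2 * precision * recall / (precision + recall)


def mean_f_measure(predicted: set[int], references: list[set[int]]) -> float:
    """Average F-measure of one summary against several human summaries."""
    return sum(f_measure(predicted, r) for r in references) / len(references)


if __name__ == "__main__":
    # Hypothetical 600-frame video with new shots starting at frames 120, 300, 455.
    print(boundaries_to_shots([120, 300, 455], 600))
    # prints [(0, 119), (120, 299), (300, 454), (455, 599)]

    # Hypothetical summary scored against three hypothetical human summaries.
    summary = {0, 2, 5}
    human = [{0, 2, 4}, {1, 2, 5}, {0, 5, 6}]
    print(f"{mean_f_measure(summary, human):.3f}")  # prints 0.667
```

Scoring at the shot level keeps the comparison independent of exact frame positions, which is one reason shot segmentation files are useful for repeatable evaluation.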

Dataset

Please refer to the following article when using this dataset:

R. Panda, N. C. Mithun, A. Roy-Chowdhury, "Diversity-aware Multi-Video Summarization," IEEE Transactions on Image Processing, 2017.

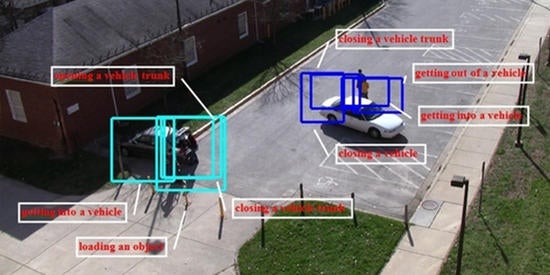

Camera Network Tracking Dataset (CamNeT)

CamNeT is a non-overlapping camera network dataset designed for tracking. It uses five to eight cameras covering both indoor and outdoor scenes at the University of California, Riverside. The dataset consists of six scenarios, which present challenges such as lighting changes, complex topographies, crowded scenes, and changing group dynamics. The scenarios combine persons following predefined trajectories with persons moving along random trajectories. A baseline multi-target tracking system and its results are provided.

Dataset

Please refer to the following article when using this dataset:

S. Zhang, E. Staudt, T. Faltemier, A. Roy-Chowdhury, "A Camera Network Tracking (CamNeT) Dataset and Performance Baseline," IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Beach, Hawaii, January 2015.

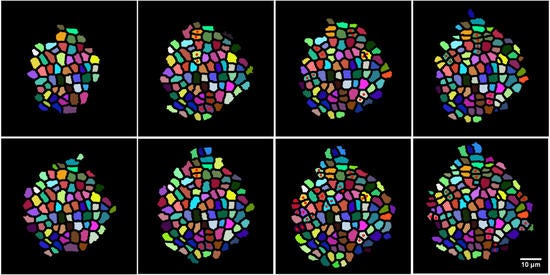

DivNet Image Dataset

The DivNet dataset contains images for around 550 object and scene categories, with an average of about 1,000 images per category. The categories are mainly drawn from the ILSVRC 2016 object detection and scene classification challenges. The images for each category were originally collected from Google, Bing, and Flickr and then refined in a semi-supervised incremental sparse-coding framework, so that a high-quality image dataset could be created with limited human labeling. The released images were originally crawled under a Creative Commons license (CC BY 3.0) and are provided for non-commercial reuse.

Dataset

Please refer to the following article when using this dataset:

N. C. Mithun, R. Panda, A. K. Roy-Chowdhury, "Generating Diverse Image Datasets with Limited Labeling," ACM Multimedia (ACM MM), Amsterdam, October 2016.