Three papers on context-aware adversarial attacks at AAAI 2022, CVPR 2022, and NeurIPS 2021

Our recent work, presented at NeurIPS, AAAI, and CVPR, shows how to develop adversarial attacks that are aware of contextual relationships, both among multiple objects in a scene and across the frames of a video.

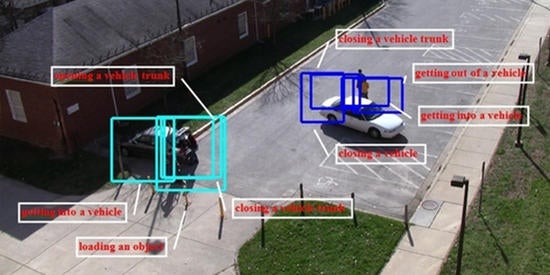

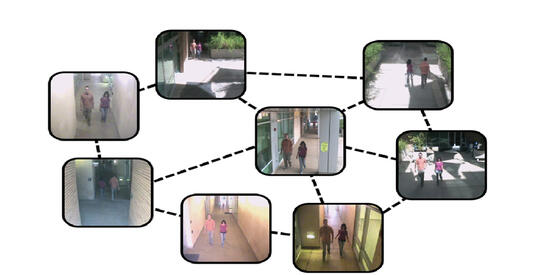

Most existing attack mechanisms aim to misclassify specific objects or activities. However, most scenes contain multiple objects, and there are usually relationships among them; for example, certain objects co-occur more frequently than others. In computer vision, this is often referred to as context. We have shown how to design context-aware attack methods that exploit the contextual relationships between objects in a scene to craft more effective attacks; these methods were published at AAAI 2022 and CVPR 2022. We also exploited temporal contextual relationships to craft attacks against video sequences, as presented at NeurIPS 2021.
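To make the notion of co-occurrence context concrete, here is a minimal Python sketch that counts how often pairs of labels appear together in a set of reference scenes and flags a label set containing a pair seen together fewer than `min_support` times. The scene data and the functions `cooccurrence_counts` and `is_context_consistent` are hypothetical illustrations; this is a toy stand-in, not the context models used in these papers.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_counts(scenes):
    """Count, for each unordered label pair, the scenes that contain both labels."""
    counts = Counter()
    for labels in scenes:
        counts.update(combinations(sorted(set(labels)), 2))
    return counts

def is_context_consistent(labels, counts, min_support=1):
    """Toy context check (hypothetical): every pair of distinct labels in a scene
    must have co-occurred in at least `min_support` reference scenes."""
    return all(counts[pair] >= min_support
               for pair in combinations(sorted(set(labels)), 2))

# Hypothetical reference scenes standing in for a labeled training set.
scenes = [
    ["car", "road", "person"],
    ["car", "road"],
    ["person", "road", "bicycle"],
    ["cow", "grass"],
    ["cow", "grass", "person"],
]
counts = cooccurrence_counts(scenes)
print(is_context_consistent(["car", "road", "person"], counts))  # True
print(is_context_consistent(["car", "road", "cow"], counts))     # False: "car" and "cow" never co-occur
```

In this toy setup, misclassifying the person in a road scene as a cow yields a label set that the check rejects, which illustrates why an attack that ignores context can be exposed by a context-aware defense.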

1. In the AAAI 2022 paper, we developed an optimization strategy for crafting adversarial attacks so that the objects, as perceived by the victim model, remain contextually consistent, which makes the attacks hard to detect with context-aware classifiers or detectors. This work assumes that the attacker can query the victim model a few times (five or fewer); the CVPR 2022 paper removes this requirement entirely (zero queries), leading to an even more effective attack.

Title: Context-Aware Transfer Attacks for Object Detection

Z. Cai, X. Xie, S. Li, M. Yi, C. Song, S. Krishnamurthy, A. Roy-Chowdhury, M. S. Asif, "Context-Aware Transfer Attacks for Object Detection," AAAI Conference on Artificial Intelligence (AAAI), 2022

Title: Zero-Query Transfer Attacks on Context-Aware Object Detectors

Z. Cai, S. Rane, A. Brito, C. Song, S. Krishnamurthy, A. Roy-Chowdhury, M. S. Asif, "Zero-Query Transfer Attacks on Context-Aware Object Detectors," IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022
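One ingredient of a context-aware attack is deciding what a victim object should be misclassified as. The Python sketch below is a hypothetical greedy heuristic, not the optimization in the AAAI 2022 or CVPR 2022 papers: it picks the target label whose weakest co-occurrence link with the other objects in the scene is strongest, so the resulting label set stays plausible. It covers only this label-planning step; crafting the pixel perturbation that realizes the chosen label is not shown. The scene data and the names `cooccurrence_counts`, `support`, and `pick_consistent_target` are illustrative assumptions.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_counts(scenes):
    """Count, for each unordered label pair, the scenes that contain both labels."""
    counts = Counter()
    for labels in scenes:
        counts.update(combinations(sorted(set(labels)), 2))
    return counts

def support(a, b, counts):
    """Number of reference scenes in which labels a and b co-occur."""
    return counts[tuple(sorted((a, b)))]

def pick_consistent_target(victim_label, context_labels, candidates, counts):
    """Hypothetical greedy heuristic: among candidate target labels other than the
    victim's true label, return the one whose weakest co-occurrence link with the
    remaining objects in the scene is strongest, plus that link's support."""
    best, best_score = None, -1
    for target in candidates:
        if target == victim_label:
            continue
        score = min((support(target, c, counts) for c in context_labels), default=0)
        if score > best_score:  # ties keep the earlier candidate
            best, best_score = target, score
    return best, best_score

# Hypothetical reference scenes standing in for a labeled training set.
scenes = [
    ["car", "road", "person"],
    ["car", "road", "bicycle"],
    ["person", "road", "bicycle"],
    ["cow", "grass", "person"],
]
counts = cooccurrence_counts(scenes)
# Attack the "person" in a street scene that also contains a car and a road.
print(pick_consistent_target("person", ["car", "road"], ["person", "cow", "bicycle"], counts))
# ('bicycle', 1)
```

Here "cow" has never appeared alongside a car or a road, while "bicycle" has co-occurred with both, so the heuristic prefers "bicycle" as the misclassification target.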

2. The NeurIPS 2021 paper shows how geometric transformations can be used to design highly query-efficient attacks on video classification systems. Specifically, we design a novel iterative algorithm, Geometric TRAnsformed Perturbations (GEO-TRAP), for attacking video classification models. GEO-TRAP employs standard geometric transformation operations to reduce the search for effective gradients to a search over a small set of parameters that define these operations. As a result, the algorithm finds successful perturbations with surprisingly few queries. For example, on the widely used Jester dataset, adversarial examples generated by GEO-TRAP achieve better attack success rates with ~73.55% fewer queries than the state-of-the-art method for video adversarial attacks.

S. Li*, A. Aich*, S. Zhu, M. S. Asif, C. Song, A. Roy-Chowdhury, S. Krishnamurthy, Advances in Neural Information Processing Systems (NeurIPS), 2021 (* joint first authors)
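The search-space reduction behind GEO-TRAP can be illustrated with a small NumPy sketch. Here the perturbation for each frame is a fixed ±1 pattern translated by a per-frame (dy, dx) offset, so a query-based attacker estimates gradients over only 2·T parameters (16 for an 8-frame clip) instead of T·H·W pixel values (2,048 for 8 frames of 16×16). Everything in it is an assumption made for illustration: the translation-only warps with wrap-around, the NES-style antithetic gradient estimator, the accept-only-if-better update, and the toy linear victim loss are not GEO-TRAP's actual transformations, estimator, or target models.

```python
import numpy as np

def shift_frame(base, dy, dx):
    """Translate a 2-D pattern by a fractional offset (dy, dx) with wrap-around,
    bilinearly blending the four neighbouring integer np.roll shifts."""
    y0, x0 = int(np.floor(dy)), int(np.floor(dx))
    fy, fx = dy - y0, dx - x0
    out = np.zeros_like(base, dtype=float)
    for sy, wy in ((y0, 1.0 - fy), (y0 + 1, fy)):
        for sx, wx in ((x0, 1.0 - fx), (x0 + 1, fx)):
            out += wy * wx * np.roll(base, (sy, sx), axis=(0, 1))
    return out

def make_perturbation(base, params, eps):
    """Map a (T, 2) array of per-frame translations to a (T, H, W) perturbation.
    Entries of `base` lie in [-1, 1], so every value stays within [-eps, eps]."""
    return eps * np.stack([shift_frame(base, dy, dx) for dy, dx in params])

def estimate_grad(loss_fn, params, sigma, n_samples, rng):
    """Antithetic random-direction (NES-style) gradient estimate w.r.t. params,
    costing 2 * n_samples black-box queries."""
    grad = np.zeros_like(params)
    for _ in range(n_samples):
        u = rng.standard_normal(params.shape)
        grad += (loss_fn(params + sigma * u) - loss_fn(params - sigma * u)) * u
    return grad / (2.0 * sigma * n_samples)

def attack(video, victim_loss, eps=0.05, iters=20, lr=0.5, sigma=0.5,
           n_samples=4, seed=0):
    """Hypothetical query-based attack that searches over 2 * T translation
    parameters rather than T * H * W pixel values."""
    rng = np.random.default_rng(seed)
    T, H, W = video.shape
    base = np.sign(rng.standard_normal((H, W)))  # fixed +/-1 pattern (assumption)
    queries = 0

    def loss_fn(p):
        nonlocal queries
        queries += 1  # each call stands for one query to the black-box victim
        return victim_loss(video + make_perturbation(base, p, eps))

    params = np.zeros((T, 2))
    initial = best = loss_fn(params)
    for _ in range(iters):
        g = estimate_grad(loss_fn, params, sigma, n_samples, rng)
        candidate = params + lr * g   # step uphill on the attacker's loss
        cand_loss = loss_fn(candidate)
        if cand_loss > best:          # keep only steps that improve the loss
            params, best = candidate, cand_loss
    return make_perturbation(base, params, eps), initial, best, queries

# Toy victim (assumption): loss = negative true-class margin of a linear scorer.
rng = np.random.default_rng(1)
video = rng.standard_normal((8, 16, 16))
w = rng.standard_normal(video.shape)

def victim_loss(v):
    return -float(np.sum(w * v))

delta, initial, final, n_queries = attack(video, victim_loss)
print(delta.shape, n_queries)  # (8, 16, 16) 181
```

The accept-only-if-better rule guarantees the attacker's loss never decreases, at the cost of one extra query per iteration; the counter makes the budget explicit (1 + 20 × 9 = 181 queries with these settings).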

These methods were developed under the Techniques for Machine Vision Disruption program within the DARPA AI Explorations umbrella.